Abstract

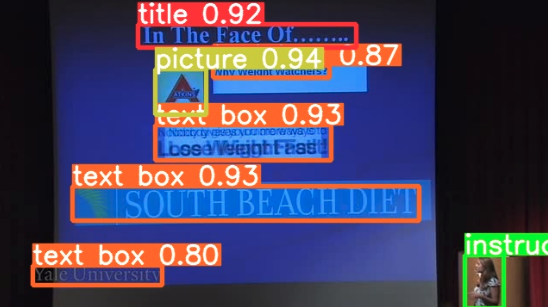

Educational content is increasingly available online, most commonly as audio and video. Many of these educational videos are unprocessed: they are simply captured with a camera and then uploaded to video servers for viewers to watch. We believe that automatic video analysis that recovers the structure of educational videos would allow learners and creators to fully exploit the semantics of the captured content. In this paper, we outline our research plan towards better semantic understanding of educational videos. We highlight our work so far: (i) we extended the FitVid Dataset to exploit the semantics of any type of learning video; and (ii) we improved upon existing state-of-the-art slide classification techniques.