About me

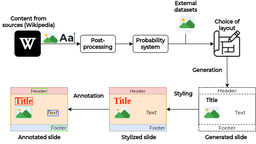

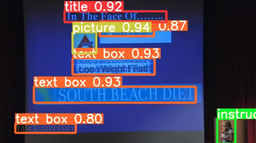

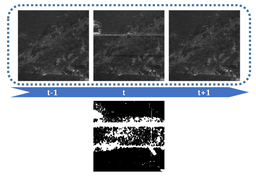

My research focuses on making lecture videos more navigable, interactive, and accessible through multimodal analysis. This means building systems that jointly process and understand the distinct streams of information in a video: the visual content of slides or a blackboard, the spoken transcript, and the slide text extracted with OCR.

To put these ideas into practice, I designed and built an interactive application that automatically structures lecture video content and links these information sources to make videos easier to explore.

Interests

- Multimedia

- Vision

- Deep Learning

- Large Language Models

- NLP

Education

IRIT, Toulouse, France

NUS/IPAL, Singapore

ENSEEIHT, Toulouse, France