Abstract

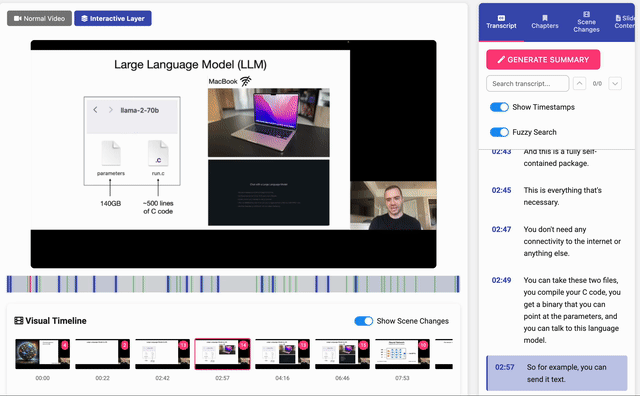

The growth of online educational content, particularly slide-based video lectures, has created a need for tools that improve navigation, comprehension, and accessibility. Many existing systems for video analysis are closed-source, which hinders reproducibility and extension. To address this, we present the Lecture Video Analysis Toolkit, an open-source, proof-of-concept application for the multimodal analysis of slide-based video lectures. The toolkit integrates a processing pipeline that includes scene detection, visual entity extraction, transcription, optical character recognition (OCR), and embedding-based semantic linking between spoken and visual content. A key contribution is an interactive interface, motivated by early user feedback, that lets users customize the viewing experience to their individual preferences. The entire system is openly available and serves as a research prototype for validating the potential of multimodal analysis to create more inclusive and effective learning experiences.
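The abstract does not specify how spoken and visual content are matched. One plausible approach is nearest-slide matching by cosine similarity. This sketch assumes that transcript segments and OCR'd slide text have already been embedded into vectors of the same dimension by some embedding model. The function names and the 0.5 similarity threshold are hypothetical illustrations, not the toolkit's actual API.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def link_segments_to_slides(
    segment_embeddings: List[Sequence[float]],
    slide_embeddings: List[Sequence[float]],
    threshold: float = 0.5,  # hypothetical cut-off; a real system would tune this
) -> Dict[int, int]:
    """Map each transcript-segment index to its most similar slide index.

    Segments whose best similarity falls below `threshold` are left unlinked.
    """
    links: Dict[int, int] = {}
    for seg_idx, seg in enumerate(segment_embeddings):
        scores = [cosine_similarity(seg, slide) for slide in slide_embeddings]
        if not scores:
            continue
        best = max(range(len(scores)), key=scores.__getitem__)
        if scores[best] >= threshold:
            links[seg_idx] = best
    return links


if __name__ == "__main__":
    # Toy 3-dimensional embeddings standing in for real model outputs.
    segments = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    slides = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.2]]
    print(link_segments_to_slides(segments, slides))  # {0: 0, 1: 1}
```

In this toy example, the third segment is similar to neither slide, so it stays unlinked. Thresholding like this avoids forcing a link for off-slide remarks.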